the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Spatial probabilistic calibration of a high-resolution Amundsen Sea Embayment ice sheet model with satellite altimeter data

Andreas Wernecke

Tamsin L. Edwards

Isabel J. Nias

Philip B. Holden

Neil R. Edwards

Probabilistic predictions of the sea level contribution from Antarctica often have large uncertainty intervals. Calibration of model simulations with observations can reduce uncertainties and improve confidence in projections, particularly if this exploits as much of the available information as possible (such as spatial characteristics), but the necessary statistical treatment is often challenging and can be computationally prohibitive. Ice sheet models with sufficient spatial resolution to resolve grounding line evolution are also computationally expensive.

Here we address these challenges by adopting and comparing dimension-reduced calibration approaches based on a principal component decomposition of the adaptive mesh model BISICLES. The effects model parameters have on these principal components are then gathered in statistical emulators to allow for smooth probability density estimates. With the help of a published perturbed parameter ice sheet model ensemble of the Amundsen Sea Embayment (ASE), we show how the use of principal components in combination with spatially resolved observations can improve probabilistic calibrations. In synthetic model experiments (calibrating the model with altered model results) we can identify the correct basal traction and ice viscosity scaling parameters as well as the bedrock map with spatial calibrations. In comparison a simpler calibration against an aggregated observation, the net sea level contribution, imposes only weaker constraints by allowing a wide range of basal traction and viscosity scaling factors.

Uncertainties in sea level rise contribution of 50-year simulations from the current state of the ASE can be reduced with satellite observations of recent ice thickness change by nearly 90 %; median and 90 % confidence intervals are 18.9 [13.9, 24.8] mm SLE (sea level equivalent) for the proposed spatial calibration approach, 16.8 [7.7, 25.6] mm SLE for the net sea level calibration and 23.1 [−8.4, 94.5] mm SLE for the uncalibrated ensemble. The spatial model behaviour is much more consistent with observations if, instead of Bedmap2, a modified bedrock topography is used that most notably removes a topographic rise near the initial grounding line of Pine Island Glacier.

The ASE dominates the current Antarctic sea level contribution, but other regions have the potential to become more important on centennial scales. These larger spatial and temporal scales would benefit even more from methods of fast but exhaustive model calibration. Applied to projections of the whole Antarctic ice sheet, our approach has therefore the potential to efficiently improve our understanding of model behaviour, as well as substantiating and reducing projection uncertainties.

- Article

(1984 KB) -

Supplement

(1719 KB) - BibTeX

- EndNote

The Antarctic ice sheet is currently losing mass at a rate of around 0.5 to 0.6 mm global mean sea level equivalent per year (mm SLE a−1), predominantly in the Amundsen Sea Embayment (ASE) area of the West Antarctic Ice Sheet (WAIS) (Shepherd et al., 2018; Bamber et al., 2018). This is due to the presence of warm circumpolar deep water causing sub-shelf melting and ice dynamical changes including retreat of the grounding line that divides grounded from floating ice (Khazendar et al., 2016). However, the future response of the Antarctic ice sheet to a changing climate is one of the least well understood aspects of climate predictions (Church et al., 2013). Predictions of the dynamic ice sheet response are challenging because local physical properties of the ice and the bedrock it is laying on are poorly observed. Parameterisations of unresolved physical processes are often used and need to be validated (DeConto and Pollard, 2016; Edwards et al., 2019; Cornford et al., 2015; Pattyn et al., 2017). Progress has been made in the development of numerical models with higher resolutions and improved initialisation methods (Pattyn, 2018). But these improvements cannot yet overcome the challenges of simulating what can be described as an underdetermined system with more unknowns than knowns. For this reason, some studies use parameter perturbation approaches which employ ensembles of model runs, where each ensemble member is a possible representation of the ice sheet using a different set of uncertain input parameter values (Nias et al., 2016; DeConto and Pollard, 2016; Schlegel et al., 2018; Gladstone et al., 2012; Ritz et al., 2015; Bulthuis et al., 2019) (In this context “input parameters” can refer to initial values of state variables, which will change during the simulation, or model parameters, which represent physical relationships. All of those quantities can be poorly known and contribute to uncertainties in predictions.). In most studies, the computational expense of exploring uncertainties either restricts the minimum spatial resolution to several kilometres, causing challenges in representing the grounding line, or else restricts the application to the regional scale. One exception is the ensemble by Nias et al. (2016), which uses the adaptive mesh model BISICLES at sub-kilometre minimum resolution over the ASE domain (Pine Island, Thwaites, Smith and Pope glaciers).

In Antarctic ice sheet model ensemble studies, the projected sea level contribution for high emission scenarios by the end of the century typically ranges from about zero to about 40 cm; i.e. the ensemble spread (∼40 cm) is twice the predicted (mean/median) contribution (∼20 cm) (Edwards et al., 2019). It is therefore essential to constrain ice sheet model parameters to reduce these uncertainties in order to attain sharper and more distinctive prediction distributions for different climate scenarios. In other words, the uncertainties are of the same order of magnitude as the projections themselves; hence the reduction of uncertainty is essential to quantify projections effectively. Statistical calibration of model parameters refines predictions by using observations to judge the quality of ensemble members, in order to increase confidence in, and potentially reduce uncertainty in, the predicted distributions. Calibration approaches range from straightforward tuning to formal probabilistic inference. Simple ruled out/not ruled out classifications (also called history matching or pre-calibration) can be used to identify and reject completely unrealistic ensemble members while avoiding assumptions about the weighting function used for the calibration (e.g. Holden et al., 2010; Williamson et al., 2017; Vernon et al., 2010). Formal probabilistic, or Bayesian, calibrations using high dimensional datasets require experience of statistical methods and can be computationally prohibitive (Chang et al., 2014). There are few ice sheet model studies using calibrations, among which are history matching (DeConto and Pollard, 2016; Edwards et al., 2019), gradual weight assignments (Pollard et al., 2016) and more formal probabilistic treatments (Ritz et al., 2015; Chang et al., 2016a, b). Most use one or a small number of aggregated summaries of the observations, such as spatial and/or temporal averages, thus discarding information that might better constrain the parameters. Ideally, then, calibrating a computer model with observations should use all available information rather than aggregating the observations with spatio-temporal means.

However, the formal comparison of model simulations with two-dimensional observations, such as satellite measurements of Antarctica, poses statistical challenges. Measurements of the earth system typically show coherent spatial patterns, meaning that nearby observations are highly correlated due to the continuity of physical quantities. Model-to-observation comparisons on a grid-cell-by-grid-cell basis can therefore not be treated as statistically independent. On the other hand, appropriate treatment of these correlations with the inclusion of a co-variance matrix in the statistical framework for calibration can be computationally prohibitive (Chang et al., 2014). While the simplest way to avoid this is by aggregation, either over the whole domain (Ritz et al., 2015; DeConto and Pollard, 2016; Edwards et al., 2019) or subsections assumed to be independent (Nias et al., 2019), a more sophisticated approach that preserves far more information is to decompose the spatial fields into orthogonal principal components (PCs) (Chang et al., 2016a, b; Holden et al., 2015; Sexton et al., 2012; Salter et al., 2018; Higdon et al., 2008). The decompositions are used as simplified representations of the original model ensemble in order to aid in predicting the behaviour of computationally expensive models, and in some cases to restrict flexibility of the statistical model in the parameter calibration so that the problem is computationally feasible and well-posed (Chang et al., 2016a, b). But the latter studies, which employ a formal probabilistic approach, still assume spatial and/or temporal independence at some point in the calibration. This independence assumption is not necessary if the weighting (likelihood) calculation is shifted from the spatio-temporal domain into that of principal component basis vectors, as proposed in Chang et al. (2014).

A further difficulty is the computational expense of Antarctic ice sheet models that have sufficient spatial resolution to resolve grounding line migration. This can be overcome by building an “emulator”, which is a statistical model of the response of a physically based computer model. Emulation allows a small ensemble of the original ice sheet model to be extended to a much larger number. This approach has recently been applied in projections of the Antarctic ice sheet contribution to sea level rise by interpolation in the input parameter space in general (Edwards et al., 2019; Chang et al., 2016a, b; Bulthuis et al., 2019) and melt forcing in particular (Levermann et al., 2014). Emulation becomes particularly important in model calibration, as this down-weights or rejects ensemble members and therefore reduces the effective ensemble size.

The aim of this study is to develop a practical, yet comprehensive calibration approach for data from the high-resolution ice sheet model BISICLES. This approach is compared to more traditional methods by means of a synthetic model test and the impact on probability density functions for the dynamic sea level contribution from 50-year simulations of the Amundsen Sea Embayment. We derive principal components of ice thickness change estimates with a singular value decomposition, thus exploiting more of the available information of satellite observations than previous studies. The statistical independence of those PCs aids the use of Bayesian (probabilistic) inference. We use emulation of the ice sheet model to ensure dense sampling of the input space and therefore smooth probability density functions.

In Sect. 2 we describe the ice sheet model and satellite observation data, followed by the introduction of the calibration approaches used and the benchmark procedure in Sect. 3. In Sect. 4 we present the benchmark results and probabilistic ice sheet simulation distributions, which are discussed in Sect. 5.

2.1 Ice sheet model ensemble

2.1.1 Ensemble setup

We use the ice sheet model ensemble published in Nias et al. (2016) using the adaptive mesh model BISICLES (Cornford et al., 2013) with equations from Schoof and Hindmarsh (2010). The mesh has a minimum spatial resolution of 0.25 km and evolves during the simulation. The model was run for the Amundsen Sea Embayment with constant climate forcing for 50 years with 284 different parameter configurations. Two uncertain inputs are varied categorically: two different bedrock elevation maps are used, as well as two different friction law exponents. The first bedrock elevation map is Bedmap2, which is based on an extensive compilation of observations (Fretwell et al., 2013), while the second was modified by Nias et al. (2016) in order to reduce unrealistic model behaviour. The modifications are primarily local (<10 km) and include the removal of a topographic rise near the initial grounding line of Pine Island Glacier. The friction law exponent defines the linearity of the basal ice velocity with basal traction, and values of 1 (linear) and 1∕3 (power law) have been used. In addition, three scalar parameters were perturbed continuously, representing amplitude scalings of (1) the ocean-induced basal melting underneath ice shelves (i.e. the floating extensions of the ice streams), (2) the effective viscosity of the ice, determining the dynamic response to horizontal strain, and (3) the basal traction coefficient representing bedrock–ice interactions and local hydrology. The default values for these three parameters were determined for initialisation by model inversion (Habermann et al., 2012; MacAyeal et al., 1995) of surface ice speeds from Rignot et al. (2011). For grounded ice the model inversion attempts to find the optimal combination of the two-dimensional fields of effective viscosity and basal traction coefficients for a given ice geometry to reproduce the before mentioned observed surface speed of the ice. It contains penalty terms to avoid overfitting but does not directly address apparent inconsistencies between the datasets, sometimes framed as violations to mass conservation. In other words, for a given combination of ice geometry and ice speed it is possible that the only way to satisfy mass conservation is by unrealistic, small-scale, high-amplitude rates of ice thickness change. These are typically caused by errors in either of the datasets, but interpolation and locally inappropriate model assumptions can contribute as well. The modified bedrock by Nias et al. (2016) is designed to reduce those inconsistencies.

The scaling parameters are subsequently perturbed between half and double the default values in a Latin hypercube design by Nias et al. (2016). Different default basal traction coefficient fields have been found for each combination of bed topography and friction law, while the default viscosity field only differs between bed geometries (but not friction laws). We use the normalised parameter ranges with halved, default and doubled scaling factors mapped to 0, 0.5 and 1, respectively.

2.1.2 Ensemble behaviour

The ensemble covers a wide range of sea level rise contributions for the 50-year period, with the most extreme members reaching −0.19 and 1.62 mm SLE a−1, respectively. About 10 % of the ensemble shows an increasing volume above flotation (negative sea level contribution), and the central runs (0.5 for traction, viscosity and ocean melt parameters) contribute 0.27 mm SLE a−1 (linear friction) and 0.26 mm SLE a−1 (non-linear friction). The average contributions are generally reasonably close to satellite observations (0.33±0.05 mm SLE a−1 from 2010 to 2013, McMillan et al., 2014), with 0.30 mm SLE a−1 for linear friction and modified bedrock, 0.37 mm SLE a−1 for linear friction and Bedmap2, 0.38 mm SLE a−1 for non-linear friction and modified bedrock, and 0.51 mm SLE a−1 for non-linear friction and Bedmap2 (Nias et al., 2016).

We allow for a short spin-up phase of 3 years (selected by manual inspection) for the model to adjust to the perturbations. The following 7 years are used as the calibration period; therefore the temporal mean of the ice thickness change from year 4 to year 10 (inclusive) of the simulations will be compared with satellite observations which also span a 7-year period.

Other spin-up and calibration periods have been tested and show small impact on the results for calibrations in basis representation. For example the median for the basis calibration of the sea level contribution at the end of the simulations is 18.9 mm SLE with the described 3-year spin-up and 7-year calibration period and 19.1 mm SLE for a 7-year spin-up followed by a short 3-year calibration period. We further tested the 3-year spin-up with a 4-year calibration period and other calibration approaches (see Supplement).

We regrid the simulated ice thickness change fields for this period to the same spatial resolution as the observations (10 km×10 km) by averaging. Estimates of the sea level rise contribution at the end of the model period (50 years), used to illustrate the impact of calibrations on simulations of the future, are calculated directly on the model grid. We use the same catchment area mask as in Nias et al. (2016).

The simulations used here are not intended to be predictions of the future but instead project the current state of the ASE glacial system with a constant recent-past climate forcing and perturbed parameters into the future. No changes in the climate are represented in the ensemble. End-of-simulation sea level contribution distributions are presented to illustrate and compare the value of calibrations and should not be understood as best estimates of future sea level contribution. For a full description of the model ensemble see Nias et al. (2016).

2.2 Observations

The calibration target is based on a compilation of five satellite altimeter datasets of surface elevation changes from 1992 to 2015 by Konrad et al. (2017). The synthesis involves fitting local empirical models over spatial and temporal extents of up to 10 km and 5 years, respectively, as developed by McMillan et al. (2014). The satellite missions show high agreement, with a median mismatch of 0.09 m a−1. The dataset has a resolution of 10 km×10 km spatially and 6 months temporally. Only the last 7 years (beginning of 2008 to beginning of 2015) of the dataset are used here for calibration. The following satellite missions contributed to this period: ERS-2 (until 2011), Envisat (until 2012), ICESat (until 2009) and CryoSat-2 (2010 to 2015). All of these carry radar altimeters, with the only exception being ICESat, which had a laser altimeter (lidar) as payload.

There is no exact start date of the simulations, which makes a dating of the calibration period difficult. However, the ice flow observations from Rignot et al. (2011) used for the ice sheet initialisation are largely from a 3-year period centred around 2008, which is why this is the first year of surface elevation change observations we use. We do not correct for possible changes in firn thickness and directly convert surface elevation change rates of grounded ice into rates of ice thickness change. An average of all fourteen 6-month intervals is used for calibration; however for one calibration approach the averaging is performed in basis representation (see Sect. 3.2 for details).

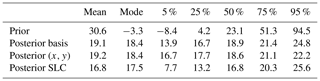

In the following we propose a new ice sheet model calibration approach, as outlined in Fig. 1. It will be tested in Sect. 3.5 and compared to alternative approaches in Sect. 3.6. This calibration approach consists of an initial spatial decomposition of the model data into principal components (PCs), which strongly simplifies subsequent emulation and calibration. In particular it helps to adequately represent spatial correlation and avoid unnecessary loss of information (e.g. by comparing total or mean model–observation differences). Emulation – statistical modelling of the ice sheet model – helps to overcome computational constraints and to refine probability density functions. We construct a spatial emulator for ice thickness change in the calibration period to represent the two-dimensional model response. In this way we predict how BISICLES would behave for additional perturbed-parameter runs, and we use the much larger emulator ensemble in the subsequent calibration instead of the original BISICLES ensemble. The calibration then infers model parameter values which are likely to lead to good representations of the ice sheet. These parameter probabilities are used as weights for a second, non-spatial emulator to represent the total sea level rise at the end of the 50-year simulations.

Figure 1Flow diagram of the proposed calibration procedure. Horizontal boxes represent steps in the analysis, diamonds represent observations and numbers refer to corresponding sections in this study.

3.1 Principal component decomposition

Let y(θi) be the m dimensional spatial model ice thickness change output for a parameter setting θi, where m is the number of horizontal grid cells, and the model ensemble has n members so that , being the whole set of input parameters, spanning in our case the d=5 dimensional model input space. The m×n matrix is the row-centred combined model output of the whole Nias et al. (2016) ensemble with the ith column consisting of y(θi) minus the mean of all ensemble members, , and each row represents a single location. In the following we will assume n<m. A principal component decomposition is achieved by finding U, S and V so that

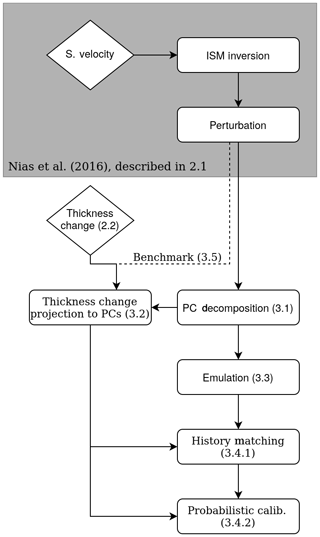

where the m×n rectangular diagonal matrix S contains the n positive singular values of , and U and VT are unitary. The rows of VT are the orthonormal eigenvectors of , and the columns of U are the orthonormal eigenvectors of . In both cases the corresponding eigenvalues are given by diag(S)2. By convention U, S and VT are arranged so that the values of diag(S) are descending. We use B=US as shorthand for the new basis and call the ith column of B the ith principal component. The first five principal components have been normalised for Fig. 2 to show more detail of the spatial pattern.

The fraction of ensemble variance represented by a principal component is proportional to the corresponding eigenvalue of U, and typically there is a number k<n for which the first k principal components represent the whole ensemble sufficiently well. We choose k=5 so that 90 % of the model variance is captured (Fig. 2).

with B′ and V′ consisting of the first k columns of B and V.

This truncation limits the rank of to k=5. The PCs are by construction orthogonal to each other and can be treated as statistically independent.

Figure 2The first five normalised PCs of the model ice thickness change fields, building an orthogonal basis. They represent the main modes of variation in the model ensemble and are unitless since normalised. The lower left graph shows the fraction of total variance represented by each PC individually (grey) and cumulatively (red), based on squared singular values.

3.2 Observations in basis representation

One of the calibration approaches we investigate uses the PCs derived before for both the model and observations (see Sect. 3.4). For this we have to put the observations onto the same basis vectors (PCs) as the model data. Spatial m dimensional observations z(xy) can be transformed to the basis representation by

for z(xy) on the same spatial grid as the model output y(θ), which has the mean model output subtracted for consistency.

We perform the transformation as in Eq. (3) for all of the biyearly observations over a 7-year period to get 14 different realisations of . Due to the smooth temporal behaviour of the ice sheet on these timescales we use the observations as repeated observations of the same point in time to specify as the mean and use the variance among the 14 realisations of to define the observational uncertainty in the calibration model (Sect. 3.4).

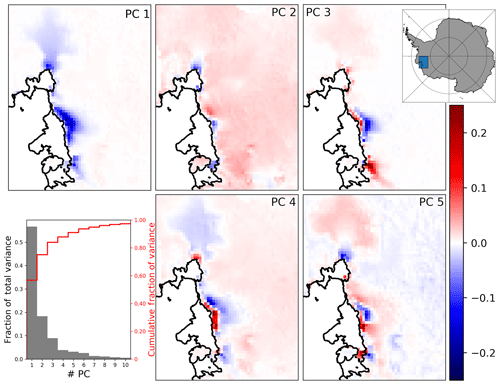

Figure 3(a) Mean observed ice thickness change from 2008 to 2015 based on data from Konrad et al. (2017). (b) As (a) but projected to first five PCs and reprojected to spatial field.

Figure 3 shows that large parts of the observations can be represented by the first five PCs from Fig. 2. This is supported by the fact that the spatial variance (Var()) of the difference between the reprojected and original fields is substantially smaller than from z(xy) alone:

It is only the part of the observations which can be represented by five PCs (right of Fig. 3) which will influence the calibration.

3.3 Emulation

For a probabilistic assessment we need to consider the probability density in the full, five-dimensional parameter space. This exploration can require very dense sampling of probabilities in the input space to ensure appropriate representation of all probable parameter combinations. This is especially the case if the calibration is favouring only small subsets of the original input space. In our case more than 90 % of the calibrated distribution would be based on just five BISICLES ensemble members. For computationally expensive models sufficient sampling can be achieved by statistical emulation, as laid out in the following.

A row of can be understood as indices of how much of a particular principal component is present in every ice sheet model simulation. Emulation is done by replacing the discrete number of ice sheet model simulations by continuous functions or statistical models. We use each row of , combined with Θ, to train an independent statistical model where the mean of the random distribution at θ is denoted ωi(θ). Here the training points are noise-free as the emulator is representing a deterministic ice sheet model, and therefore for principal components . Each of those models can be used to interpolate (extrapolation should be avoided) between members of Θ to predict the ice sheet model behaviour and create surrogate ensemble members.

We use Gaussian process (GP) models, which are a common choice for their high level of flexibility and inherent emulation uncertainty representation (Kennedy and O'Hagan, 2001; O'Hagan, 2006; Higdon et al., 2008). The random distribution of a Gaussian process model with noise-free training data at a new set of input values θ* is found by (e.g. Rasmussen and Williams, 2006)

where represents a multivariate normal distribution and the values of are derived from evaluations of the GP covariance function . Equivalent definitions are used for , and ; note that is a 1×1 matrix if we emulate one new input set at a time. We use a Matern () type function for which describes the covariance based on the distance between input parameters. Coefficients for (also called hyper-parameters), including the correlation length scale, are optimised on the marginal likelihood of ω(Θ) given the GP. We refer to Rasmussen and Williams (2006) for an in-depth discussion and tutorial of Gaussian process emulators.

Due to the statistical independence of the principal components we can combine the k GPs to

The combined Ω is in the following called emulator and ω(θ), and the entries of the diagonal matrix Σω(θ) follow from Eq. (4). We use the python module GPy for training (GPRegression()) and marginal likelihood optimisation (optimize_restarts()). In total we generate more than 119 000 emulated ensemble members. Emulator estimates of ice sheet model values in a leave-one-out cross-validation scheme are very precise with squared correlation coefficients for both emulators of R2>0.988 (see Supplement for more information).

3.4 Calibration model

Given the emulator in basis representation, a calibration can be performed either after reprojecting the emulator output back to the original spatial field (e.g. Chang et al., 2016a; Salter et al., 2018) or in the basis representation itself (e.g. Higdon et al., 2008; Chang et al., 2014). Here we will focus on the PC basis representation.

We assume the existence of a parameter configuration θ* within the bounds of Θ (the investigated input space) which leads to an optimal model representation of the real world. To infer the probability of any θ to be θ* we rely on the existence of observables, i.e. model quantities z for which corresponding measurements are available. We follow Bayes' theorem to update prior (uninformed) expectations about the optimal parameter configuration with the observations to find posterior (updated) estimates. The posterior probability of θ being the optimal θ* given the observations is

where is the likelihood of the observables to be as they have been observed under the condition that θ is θ*, and π(θ) is the prior (uninformed) probability that . Following Nias et al. (2016) we choose uniform prior distributions in the scaled parameter range [0,1] (see also Sect. 2 and Eq. 11 in Nias et al., 2016). The emulator output is related to the real state of the ice sheets in basis representation, γ, by the model discrepancy ε:

We assume the model discrepancy to be multivariate Gaussian distributed with zero mean; . The observables are in turn related to γ by

where e is the spatial observational error and the transformation follows from Eq. (3).

We simplify the probabilistic inference by assuming the model error/discrepancy ε, the model parameter values Θ, and observational error e to be mutually statistically independent and e to be spatially identically distributed with variance , so that

The k×k matrix is diagonal with the element-wise inverse of diag as diagonal values. We estimate from the variance among the 14 observational periods for the first principal component constituting ; i.e.

Note that the existence of γ is an abstract concept, implying that it is only because of an error ε that we cannot create a numerical model which is equivalent to reality. However abstract, it is a useful, hence common, statistical concept allowing us to structure expectations of model and observational limitations (Kennedy and O'Hagan, 2001). Neglecting model discrepancy, whether explicitly by setting ε=0, or implicitly, would imply that an ice sheet model can make exact predictions of the future once the right parameter values are found. This expectation is hard to justify considering the assumptions which are made for the development of ice sheet models, including sub-resolution processes. Neglecting model discrepancy typically results in overconfidence and potentially biased results.

The inclusion of model discrepancy can at the same time lead to identifiability issues where the model signal cannot be distinguished from the imposed systematic model error. Constraints on the spatial shape of the discrepancy have been used to overcome such issues (Kennedy and O'Hagan, 2001; Higdon et al., 2008). An inherent problem with representing discrepancy is that its amplitude and spatial shape are in general unknown. If the discrepancy were well understood the model itself or its output could be easily corrected. Even if experts can specify regions or patterns which are likely to show inconsistent behaviour, it cannot be assumed that these regions or patterns are the only possible forms of discrepancy. If its representation is too flexible, it can however become numerically impossible in the calibration step to differentiate between discrepancy and model behaviour.

For these reasons we choose a rather heuristic method which considers the impact of discrepancy on the calibration directly and independently for each PC. Therefore Σε is diagonal with diag. The three-sigma rule states that at least 95 % of continuous unimodal density functions with finite variance lie within three standard deviations from the mean (Pukelsheim, 1994). For the ith PC we therefore find so that 95 % of the observational distribution lies within 3σi95 from the mean of ωi(Θ), i.e. across the n ensemble members. We further note that we do not know the optimal model setup better than we know the real state of the ice sheet and set the minimum discrepancy to the observational uncertainty. Hence .

We thereby force the observations to fulfil the three-sigma rule by considering them as part of the model distribution ωi(Θ) while avoiding overconfidence in cases where observations and model runs coincide.

3.4.1 History matching

Probabilistic calibrations search for the best input parameters, but stand-alone probabilistic calibrations cannot guarantee that those are also good input parameters in an absolute sense. While “good” is subjective, it is possible to define and rule out implausible input parameters. The implausibility parameter is commonly defined as (e.g. Salter et al., 2018)

with . A threshold on ℐ(θ) can be found using the 95 % interval of a chi-squared distribution with k=5 degrees of freedom. Therefore we rule out all θ with ℐ(θ)>11. By adding this test, called history matching, we ensure that only those input parameters are used for a probabilistic calibration which are reasonably close to the observations. In the worst case the whole input space could be ruled out, forcing the practitioner to reconsider the calibration approach and uncertainty estimates. Here about 1.4 % of the parameter space cannot be ruled out.

3.4.2 Probabilistic calibration

For all θ which have not been ruled out, the likelihood follows from Eqs. (5), (7), (8) and (9):

The calibration distribution in Eq. (6) can be evaluated using Eq. (12) with a trained emulator (Eq. 4), observational (Eq. 10) and model discrepancy (above), and the prior parameter distributions π(θ) set by expert judgement.

3.5 Calibration model test

In this section we test our calibration approach on synthetic observations to see whether our method is capable of finding known-correct parameter values. We select one member of the BISICLES model ensemble at a time and add 14 different realisations of noise to it. The noise is added to see how the calibration performs if the observations cannot be fully represented by the ice sheet model.

We use spatially independent, zero-mean, normally distributed, random noise with variance equal to the local variance from the 14 periods of satellite observations. This way the variance incorporates dynamic changes (acceleration/deceleration of the ice thickness change) and technical errors (e.g. measurement and sampling errors). For each selected model run we generate 14 noise fields and add them to the single model ice thickness change field. These 14 realisations replace the 14 periods of satellite observations for the synthetic model tests.

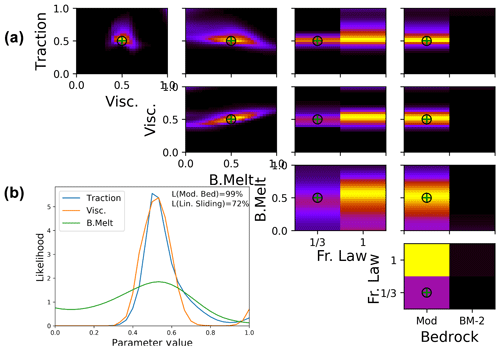

For Fig. 4 the model run with central parameter values (=0.5) for basal traction, viscosity and ocean melt scaling factors, non-linear friction, and modified bedrock has been selected, as indicated by black circles. This parameter set has been selected as it highlights the limitations of the calibration; the results of 11 other synthetic model tests are shown in the Supplement.

Figure 4Likelihood of parameter combinations of the synthetic test case (evaluations of Eq. 12). Upper right panels show likelihood values marginalised to pairs of parameters including basal melt factor (B. Melt) and friction law exponent (Fr. Law), normalised to the respective maximum for clarity. Lower left panel shows likelihood values marginalised to individual parameters for the three scalar parameters (line plots), as well as the friction law exponent and bedrock topography map (labelled as L(Lin. Sliding) and L(Mod. Bed) respectively), normalised to an integral of one, consistent with probability density functions. The central values for traction, viscosity, and ocean melt as well as non-linear friction and modified bedrock are used. The parameter values are also shown by the black circles, while the values of the set of parameters with highest likelihood are shown by green crosses.

Figure 4 illustrates which parts of the model input space are most successful in reproducing the synthetic observations of ice thickness changes during the calibration period. For visualisation we collapse the five-dimensional space onto each combination of two parameters and show how they interact. For a likely (yellow) area in Fig. 4 it is not possible to see directly what values the other three parameters have, but very unlikely (black) areas indicate that no combination of the remaining parameter values results in model configurations consistent with observations.

As can be seen from Fig. 4, marginal likelihoods of our calibration approach can favour linear friction even if the synthetic observations use non-linear friction. In addition, the ocean melt parameter is often weakly constrained or, as in this case, biased towards small melt factors. In contrast, the basal traction coefficient and viscosity scaling factors have a strong mode at, or close to, the correct value of 0.5, and the correct bedrock map can always be identified (Fig. 4 and Supplement). Different values of basal traction and viscosity have been tested in combination with both bedrock maps and show similar performance (see Supplement). The fact that the parameter setup used for the test is attributed to the maximal likelihood (green cross on top of black circle) supports our confidence in the implementation as the real parameter set is identified correctly as the best fit. Relative ambiguity with respect to friction law and ocean melt overrules the weak constraints on these parameters in the marginalised likelihoods. The higher total likelihood of linear friction can be traced back to a higher density of central ensemble members for linear friction. Non-linear friction produces more extreme ice sheet simulations as simulations with high velocities will have reduced (compared to linear friction) basal drag and speed up even more (and vice versa for simulations with slow ice flows). The frequency distribution of total sea level contribution and basis representation are therefore wider for non-linear friction (see Supplement). The relative density of ensemble members around the mode of the frequency distribution can, as for this test case, cause a smaller marginal likelihood for non-linear friction compared to linear friction (28 % to 72 %). This can be considered a caveat of the model ensemble which might very well be present in other ensembles which perturb the friction law in combination with other parameters. If the friction law cannot be adequately constrained, as is the case for all calibration approaches tested here, the prior belief in the optimal friction law must be set very carefully.

The signal of friction law and ocean melt is not strong enough to adequately constrain the calibration, even though both parameters are known to have a strong impact on the ice sheet (Pritchard et al., 2012; Arthern and Williams, 2017; Jenkins et al., 2018; Joughin et al., 2019; Brondex et al., 2019). This is likely related to the slower impact of those parameters compared to the others. A change in bedrock, basal traction or viscosity has a much more immediate effect on the ice dynamics. For example, if the basal traction field is halved, the basal drag will be reduced by the same amount, leading to a speed-up of the ice at the next time step (via the solution of the stress balance). The perturbation of ocean melt from the start of the model period has to significantly change the ice shelf thickness before the ice dynamics upstream are affected. The initialisation of the ensemble has been performed for each friction law individually, which means that the initial speed of the ice is by design equivalent. It is only after the ice velocities change that the different degrees of linearity in the friction law have any impact on the simulations. This does not mean that the simulations are insensitive to the ocean melt forcing and friction law; in fact Fig. 4 shows that both parameters have some impact on the simulation in the calibration period. It just means that the much more immediate effects of basal traction and viscosity are likely to dominate the calibration on short timescales.

From this test we conclude that basal friction law and ocean melt scaling cannot be inferred with this calibration approach and calibration period. We will therefore only calibrate the bedrock as well as basal traction and viscosity scaling factors. Several studies used the observed dynamical changes of parts of the ASE to test different friction laws. Gillet-Chaulet et al. (2016) find a better fit to evolving changes of Pine Island Glacier surface velocities for smaller m, reaching a minimum of the cost function from around and smaller. This is supported by Joughin et al. (2019), who find to capture the PIG speed-up from 2002 to 2017 very well, matched only by a regularised Coulomb (Schoof) friction law. It further is understood that parts of the ASE bed consist of sediment-free, bare rocks for which a linear Weertman friction law is not appropriate (Joughin et al., 2009). We therefore select non-linear friction by expert judgement and use a uniform prior for the ocean melt scaling.

3.6 Comparison with other calibration approaches

To put the likelihood distribution from Fig. 4 into context, we try two other methodical choices. First we calibrate in the spatial domain after reprojecting from the emulator results.

where y′(θi) are the reprojected ice sheet model results after truncation for parameter setup θ. We set the model discrepancy to twice the observational uncertainty so that the reprojected likelihood L(xy) simplifies to

Another approach is to use the net yearly sea level contribution (SLC) from the observations SLC(z(xy)) and model SLC(y′(θi)) for calibration, as done in e.g. Ritz et al. (2015).

Again, we set the model discrepancy to twice the observational uncertainty which we find from the variance of the yearly sea level contributions for the 14 biyearly satellite intervals. [mm SLE2 a−2].

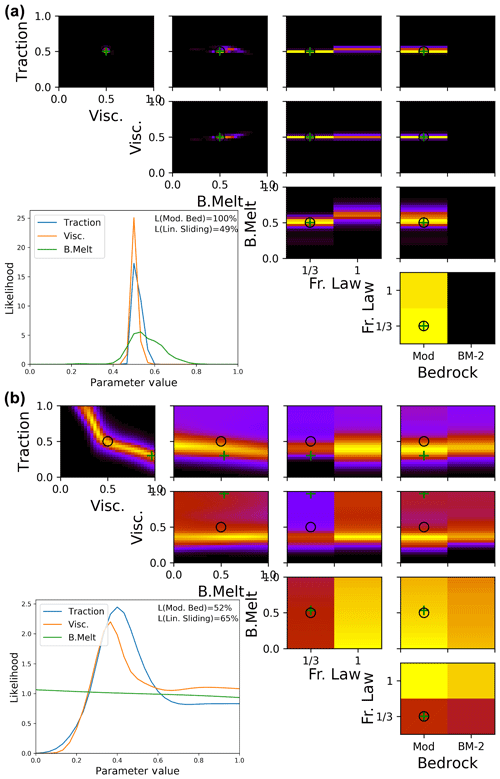

Figure 5Likelihood of parameter combinations of the synthetic test case for reprojected emulator estimates (top, a; Eq. 14) and sea level rise contribution calibration (bottom, b; Eq. 15). Upper right panels show likelihood values marginalised to pairs of parameters including basal melt factor (B. Melt) and friction law exponent (Fr. Law), normalised to the respective maximum for clarity. Lower left panel shows likelihood values marginalised to individual parameters for the three scalar parameters (line plots), as well as the friction law exponent and bedrock topography map (labelled as L(Lin. Sliding) and L(Mod. Bed) respectively), normalised to an integral of one, consistent with probability density functions. The central values for traction, viscosity, and ocean melt as well as non-linear friction and modified bedrock are used. The parameter values are also shown by the black circles, while the values of the set of parameters with highest likelihood are shown by green crosses.

Results for the synthetic model test for the calibration in (x,y) representation (Fig. 5a) show similar behaviour as for basis representation (Fig. 4) in that the friction law exponent and, to a lesser degree, basal melt are weakly constrained while the confidence in the correctly identified traction and viscosity values is even higher. Using only the net sea level rise contribution constrains the parameters weakly; it shares the limitations of not constraining the ocean melt and favouring linear friction, but, in addition, a wide range of traction–viscosity combinations perform equally well and there is no constraint on bedrock (Fig. 5b). Furthermore, the model run used as synthetic observations is not identified as the most likely setup in Fig. 5b. This demonstrates the value of the extra information – and stronger parameter constraints – provided by the use of two-dimensional observations.

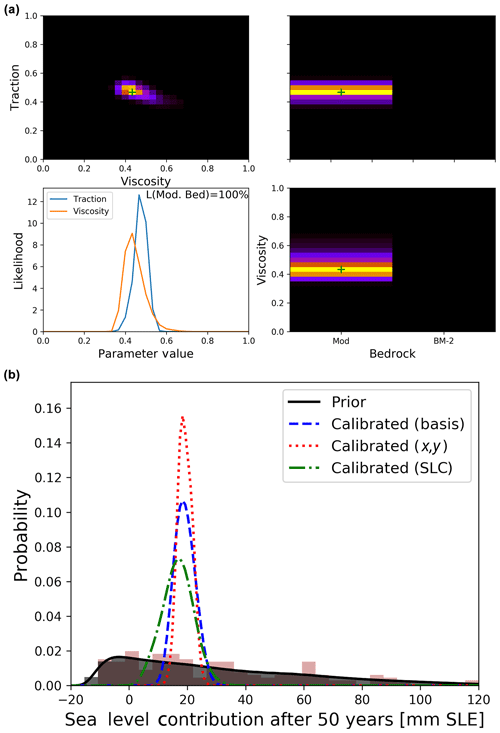

Figure 6(a) Likelihood of parameter combinations in basis representation from satellite observations (evaluations of Eq. 12). Upper right panels show likelihood values marginalised to pairs of parameters, normalised to the respective maximum for clarity. Lower left panel shows likelihood values marginalised to individual parameters for the two scalar parameters (line plots) and bedrock topography map (text and quotation within), normalised to an integral of one in the style of probability density functions. Values of the set of parameters with highest likelihood are shown by green crosses. (b) Projected sea level rise contributions at the end of model period for uncalibrated BISICLES runs (brown shades), uncalibrated emulator calls (grey shade) and different calibration approaches (coloured lines).

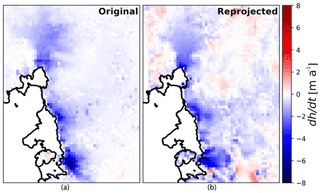

Moving on to using satellite data, the basis calibration finds that the modified bedrock from Nias et al. (2016) produces much more realistic ice thickness changes than the original Bedmap2 topography (Fig. 6a). The weighted average of basal traction and viscosity parameters are 0.47 and 0.45, respectively, which is slightly smaller than the default values (0.5). This amounts to a 3.5 % and 7.2 % reduction in amplitude compared to the optimised fields by Nias et al. (2016). While this reduction is relatively small and the central run cannot be ruled out as the optimal setup (its likelihood to be optimal is notably larger than zero), this does indicate a possible underestimation of sea level contribution by the default run. With modified bedrock, non-linear friction law, and default traction and viscosity values, the SLCs at the end of the simulation period range from 11 to 19.5 mm SLE depending on the ocean melt scaling, while the basis calibration mean SLC is 19.1 mm SLE (Table 1).

For updated probability distributions of sea level contribution after 50 years in Fig. 6b we use the calibration in basis representation (likelihood shown in Fig. 6a) as well as the reprojected (x,y) and SLC-based calibrations. The three calibration approaches are consistent (large overlap), while using the reprojection approach leads to the most narrow SLC distribution (Fig. 6b), as was indicated by the findings of Sect. 3.6. Calibration on the total sea level contribution leads to a wider distribution with the lower bound (5th percentile) being more than 6 mm SLE smaller than for the two other approaches. All of them strongly reduce uncertainties compared to the uncalibrated prior distribution, with the 90 % confidence interval width reducing to 10.9 mm SLE (basis calibration), 5.5 mm SLE (reprojected calibration) and 17.9 mm SLE (SLC calibration) from 102.9 mm SLE (uncalibrated) (Fig. 6b and Table 1). Figure 6b also shows histograms of the emulated and the original BISICLES ensembles (grey and brown shades) and illustrates how the emulation helps to overcome challenges of limited sample size.

In general, previous Antarctic ice sheet model uncertainty studies have either focused on parameter inference (Chang et al., 2016a, b; Pollard et al., 2016) or made projections that are not calibrated with observations (Schlegel et al., 2018; Bulthuis et al., 2019; Cornford et al., 2015), with the remaining probabilistic calibrated projections being based on simple (fast) models using highly aggregated observations and some relying heavily on expert judgement (Ruckert et al., 2017; Ritz et al., 2015; Little et al., 2013; Levermann et al., 2014; DeConto and Pollard, 2016; Edwards et al., 2019). Here we perform statistically founded parameter inference using spatial observations to calibrate high-resolution, grounding-line-resolving ice sheet model simulations.

The theoretical basis for most of the methodology used here has been laid out in Higdon et al. (2008), including the principal component (PC) decomposition, emulation and model calibration in the PC space. This calibration in basis representation has been adapted and tested for general circulation (climate) and ocean models (Sexton et al., 2012; Chang et al., 2014; Salter et al., 2018; Salter and Williamson, 2019). By combining this approach with a simple but robust discrepancy representation, we attempt to bridge the gap between the demanding mathematical basis and practical applications in geoscience. We compare a novel calibration of a grounding-line-resolving ice sheet model in the PC space with a reprojected calibration which assumes that the differences between the observations and calibration model are spatially uncorrelated (like e.g. Chang et al., 2016b). In comparison with studies that calibrate the total sea level contribution (e.g. Ritz et al., 2015), we are able to exploit more of the available observational information to add further constraints to the input parameters and sharpen the posterior distribution (Figs. 5 and 6b). Similar improvements should be achievable for ice sheet simulations forced by global climate model projections.

The modified bedrock removes a topographic rise near the initial grounding line of Pine Island Glacier, which could be caused by erroneous observations (Rignot et al., 2014). This rise, if present, would have a stabilising effect on the grounding line, and simulations without it can result in more than twice the sea level contribution from Pine Island Glacier for some friction laws (Nias et al., 2018). Here we find the modified bedrock topography to produce a spatial response far more consistent with observed ice thickness changes than for the original Bedmap2 bedrock (Fig. 6a). The modified bedrock has been derived by reducing clearly unrealistic behaviour of the same ice sheet model; a better calibration performance was therefore to be expected. However, no satellite observations have been used for the bedrock modification in Nias et al. (2016), nor has there been a quantitative probabilistic assessment.

The non-spatial calibration on total sea level contribution alone cannot distinguish between the two bedrocks (Fig. 5b). Simulations for this region based on Bedmap2, calibrated on the SLC, are likely to either be compensating the overly stabilising bedrock with underestimated viscosity and/or traction coefficients or underestimating the sea level contribution altogether. In addition to the unconstrained bedrock, the SLC calibration permits a wide range of traction and viscosity coefficients, including values far from the correct test values (Fig. 5b). This shows that the SLC calibration permits more model runs which are right for the wrong reasons; they have approximately the right sea level rise contribution in the calibration period but can still be poor representations of the current state of the ice sheet.

The extremely small area of likely input parameters for the reprojected (x,y) calibration (Fig. 5a and Supplement) could indicate overconfidence in the retrieved parameter values, but it could also mean that the available information is exploited more efficiently. Using subsections of the calibration period has a small impact on basis and SLC calibrations. However, for one of the sub-periods with reprojected calibration, the probability interval does not overlap with the results of the whole 7-year calibration period (Table S1 in the Supplement). Since the sub-period is part of the 7-year period we would expect the results to be non-contradictory, indicating that the probability intervals are too narrow and hence the approach, as implemented here, is overconfident. The different ways of handling model discrepancy influence the width of the probability intervals.

The average sea level contribution from the observations used here is 0.36 mm SLE a−1, consistent with estimates form McMillan et al. (2014) of 0.33±0.05 mm SLE a−1 for the Amundsen Sea Embayment from 2010 to 2013. Calibrated rates in the beginning of the model period are very similar (0.335, 0.327 and 0.363 mm SLE a−1 for basis, (x,y) and SLC calibration, respectively). For (x,y) and basis calibration the rates increase over the 50-year period, while the rate of mass loss reduces for the SLC calibration (50-year average SLC rates: 0.382, 0.384 and 0.336 mm SLE a−1 for basis, (x,y) and SLC calibration, respectively). The fact that the SLC calibration starts with the largest rates of sea level contribution but is the only approach seeing a reduction in those rates, in combination with the above-mentioned suspicion of it allowing unrealistic setups, raises questions about how reliable calibrations on total sea level contribution alone are.

The ice sheet model data used here are not based on a specific climate scenario but instead project the state of the ice sheet under current conditions into the future (with imposed perturbations). Holland et al. (2019) suggest a link between anthropogenic greenhouse gas emissions and increased upwelling of warm circumpolar deep water, facilitating melt at the base of Amundsen sea ice shelves. This would imply a positive, climate-scenario-dependent trend of ocean melt for the model period, superimposed by strong decadal variability (Holland et al., 2019; Jenkins et al., 2016, 2018). Warmer ocean and air temperatures would enhance melt and accelerate the dynamic response. Neither do the simulations used here carry the countervailing predicted increase of surface accumulation in a warmer climate (Lenaerts et al., 2016). Edwards et al. (2019) and Golledge et al. (2019) find that the Antarctic ice sheet response to very different greenhouse gas emission scenarios starts to diverge from around 2060–2070, while Yu et al. (2018) find ocean melt to have a negligible impact for the first 30 years for their simulations of Thwaites Glacier. Combined, this indicates that climate scenarios would have a small net impact on 50-year simulations.

Relating climate scenarios to local ice shelf melt rates is associated with deep uncertainties itself. CMIP5 climate models are inconsistent in predicting Antarctic shelf water temperatures so that the model choice can make a substantial (>50 %) difference in the increase of ocean melt by 2100 for the ASE (Naughten et al., 2018). Melt parameterisations, linking water temperature and salinity to ice melt rates, can add variations of another 50 % in total melt rate for the same ocean conditions (Favier et al., 2019). The location of ocean melt can be as important as the integrated melt of an ice shelf (Goldberg et al., 2019). The treatment of melt on partially floating grid cells further impacts ice sheet models significantly, even for fine spatial resolutions of 300 m (Yu et al., 2018). It is therefore very challenging to make robust climate-scenario-dependent ice sheet model predictions. Instead we use simulations of the current state of the ASE for a well defined set of assumptions for which climate forcing uncertainty is simply represented by a halving to doubling in ocean melt. The method presented here can be applied to forced simulations which would benefit from reduced uncertainty intervals to highlight the impact of climate change on ice sheet models.

The truncation of a principal component decomposition can cause or worsen problems related to the observations not being in the analysed model output space (see difference in Fig. 3). This can mean that there is no parameter configuration θ which is a good representation of the observations. Basis rotations have been proposed to reduce this problem (Salter et al., 2018); however, here we use only the portions of the observations which can be represented in the reduced PC space (Fig. 3b) and argue that configurations which are able to reproduce those portions are likely to be better general representations than those configurations which cannot. We further include a discrepancy variance for each PC to account for systematic observation–model differences, including PC truncation effects, and perform an initial history matching to ensure the observations are reasonable close to model results.

The model perturbation has been done by amplitude scaling of the optimised input fields alone; other types of variations to the basal traction coefficient fields could potentially produce model setups with better agreement to the observations (Petra et al., 2014; Isaac et al., 2015). However, computational and methodological challenges make simple scaling approaches more feasible, and the use of a published dataset bars us from testing additional types of perturbations. Emulation helps to improve the sampling of the scaling parameters but does not change the fact that we cannot assess the quality of types of perturbation which are not covered by the ice sheet model.

It should also be noted that for a given ice geometry the surface speed (used for initialisation) and ice thickness change (used for calibration) are not fully independent (conversation of mass). Finding the unperturbed traction and viscosity fields to show good agreement with ice thickness change observations is not surprising, yet it is a good test of the initialisation process, initialisation data and the quality of the initial ice geometry. For the same reasons, the optimised fields cannot be considered without uncertainty. This uncertainty can be quantified by ice thickness change observations, as has been shown here. A combined temporal and spatial calibration could help to use even more of the available information captured by observations in regions like the ASE where dynamic changes in the ice sheet took place within the observation period. The temporal component could in particular help to constrain the basal friction law exponent and ocean melt scaling.

We present probabilistic estimates of the dynamic contribution to sea level of unforced 50-year simulations of the Amundsen Sea Embayment in West Antarctica from a grounding-line-resolving ice sheet model. The Bayesian calibration of a published ice sheet model ensemble with satellite estimates of changes in ice thickness from 2008 to 2015 involves spatial decomposition to increase the amount of available information from the observations and emulation techniques to search the parameter space more thoroughly.

The calibration has been tested on synthetic test cases and can reliably constrain the bedrock, basal traction and ice viscosity amplitudes. Identifying the most successful basal friction law and ocean melt rate is more challenging, and interference of those parameters could benefit from a temporally resolved calibration approach and a longer calibration period. The use of net sea level contribution alone allows a wide range of parameter setups, which share the initial net mass loss. This ambiguity (weak constraint) also results in relatively wide sea level contribution probability distributions. The extra information from the use of two-dimensional calibrations adds stronger parameter constraints, showing that this method has the potential to reduce uncertainties in ice sheet model projections. We compare and discuss spatial calibrations in both basis and reprojected representation.

Using satellite observations we find the modified bedrock topography derived by Nias et al. (2016) to result in a quantitatively far more consistent model representation of the Amundsen Sea Embayment than Bedmap2. Imposing no climate forcing, the calibrated 50-year Amundsen Sea Embayment simulations contribute 18.4 [13.9, 24.8] mm SLE (most likely value and 90 % probability interval) to global mean sea level. Compared to prior estimates, these calibrated values constitute a drastic reduction in uncertainty by nearly 90 %.

Code can be accessed at https://github.com/Andreas948 (Wernecke, 2020).

The supplement related to this article is available online at: https://doi.org/10.5194/tc-14-1459-2020-supplement.

AW led this study, with TLE, PBH and NRE giving valuable advice on the study design and IJN on the model data processing and interpretation. All authors contributed to the interpretation of the study results. AW prepared the initial manuscript with contributions from all co-authors.

The authors declare that they have no conflict of interest.

We would like to thank Hannes Konrad for sharing and advising on the satellite observations and Mark Brandon for general advice. We also thank the anonymous reviewers which helped to significantly improve this work.

This research has been supported by the EPSRC Research of Variability and Environmental Risk (ReCoVER: EP/M008495/1) under the Quantifying Uncertainty in Antarctic Ice Sheet Instability (QUAntIS) project (RFFLP 006) (via the work of Tamsin L. Edwards, Neil R. Edwards and Philip B. Holden). It has further been supported by LC3M, a Leverhulme Trust Research Centre Award (RC-2015-029) (via the work of Neil R. Edwards and Philip B. Holden); The Open University Faculty of Science, Technology, Engineering and Mathematics; and the University of Bristol Advanced Computing Research Centre (via the work of Andreas Wernecke).

This paper was edited by Olaf Eisen and reviewed by four anonymous referees.

Arthern, R. J. and Williams, C. R.: The sensitivity of West Antarctica to the submarine melting feedback, Geophys. Res. Lett., 44, 2352–2359, https://doi.org/10.1002/2017GL072514, 2017. a

Bamber, J. L., Westaway, R. M., Marzeion, B., and Wouters, B.: The land ice contribution to sea level during the satellite era, Environ. Res. Lett., 13, 063008, https://doi.org/10.1088/1748-9326/aac2f0, 2018. a

Brondex, J., Gillet-Chaulet, F., and Gagliardini, O.: Sensitivity of centennial mass loss projections of the Amundsen basin to the friction law, The Cryosphere, 13, 177–195, https://doi.org/10.5194/tc-13-177-2019, 2019. a

Bulthuis, K., Arnst, M., Sun, S., and Pattyn, F.: Uncertainty quantification of the multi-centennial response of the Antarctic ice sheet to climate change, The Cryosphere, 13, 1349–1380, https://doi.org/10.5194/tc-13-1349-2019, 2019. a, b, c

Chang, W., Haran, M., Olson, R., and Keller, K.: Fast dimension-reduced climate model calibration and the effect of data aggregation, Ann. Appl. Stat., 8, 649–673, https://doi.org/10.1214/14-AOAS733, 2014. a, b, c, d, e

Chang, W., Haran, M., Applegate, P., and Pollard, D.: Calibrating an ice sheet model using high-dimensional binary spatial data, J. Am. Stat. Assoc., 111, 57–72, https://doi.org/10.1080/01621459.2015.1108199, 2016a. a, b, c, d, e, f

Chang, W., Haran, M., Applegate, P., and Pollard, D.: Improving ice sheet model calibration using paleoclimate and modern data, Ann. Appl. Stat., 10, 2274–2302, https://doi.org/10.1214/16-AOAS979, 2016b. a, b, c, d, e, f

Church, J. A., Clark, P. U., Cazenave, A., Gregory, J. M., Jevrejeva, S., Levermann, A., Merrifield, M. A., Milne, G. A., Nerem, R. S., Nunn, P. D., Payne, A. J., Pfeffer, W. T., Stammer, D., and Unnikrishnan, A. S.: Sea level change, Tech. rep., PM Cambridge University Press, 2013. a

Cornford, S. L., Martin, D. F., Graves, D. T., Ranken, D. F., Le Brocq, A. M., Gladstone, R. M., Payne, A. J., Ng, E. G., and Lipscomb, W. H.: Adaptive mesh, finite volume modeling of marine ice sheets, J. Comput. Phys., 232, 529–549, https://doi.org/10.1016/j.jcp.2012.08.037, 2013. a

Cornford, S. L., Martin, D. F., Payne, A. J., Ng, E. G., Le Brocq, A. M., Gladstone, R. M., Edwards, T. L., Shannon, S. R., Agosta, C., van den Broeke, M. R., Hellmer, H. H., Krinner, G., Ligtenberg, S. R. M., Timmermann, R., and Vaughan, D. G.: Century-scale simulations of the response of the West Antarctic Ice Sheet to a warming climate, The Cryosphere, 9, 1579–1600, https://doi.org/10.5194/tc-9-1579-2015, 2015. a, b

DeConto, R. M. and Pollard, D.: Contribution of Antarctica to past and future sea-level rise, Nature, 531, 591–597, https://doi.org/10.1038/nature17145, 2016. a, b, c, d, e

Edwards, T. L., Brandon, M. A., Durand, G., Edwards, N. R., Golledge, N. R., Holden, P. B., Nias, I. J., Payne, A. J., Ritz, C., and Wernecke, A.: Revisiting Antarctic ice loss due to marine ice-cliff instability, Nature, 566, 58–64, https://doi.org/10.1038/s41586-019-0901-4, 2019. a, b, c, d, e, f, g

Favier, L., Jourdain, N. C., Jenkins, A., Merino, N., Durand, G., Gagliardini, O., Gillet-Chaulet, F., and Mathiot, P.: Assessment of sub-shelf melting parameterisations using the ocean–ice-sheet coupled model NEMO(v3.6)–Elmer/Ice(v8.3) , Geosci. Model Dev., 12, 2255–2283, https://doi.org/10.5194/gmd-12-2255-2019, 2019. a

Fretwell, P., Pritchard, H. D., Vaughan, D. G., Bamber, J. L., Barrand, N. E., Bell, R., Bianchi, C., Bingham, R. G., Blankenship, D. D., Casassa, G., Catania, G., Callens, D., Conway, H., Cook, A. J., Corr, H. F. J., Damaske, D., Damm, V., Ferraccioli, F., Forsberg, R., Fujita, S., Gim, Y., Gogineni, P., Griggs, J. A., Hindmarsh, R. C. A., Holmlund, P., Holt, J. W., Jacobel, R. W., Jenkins, A., Jokat, W., Jordan, T., King, E. C., Kohler, J., Krabill, W., Riger-Kusk, M., Langley, K. A., Leitchenkov, G., Leuschen, C., Luyendyk, B. P., Matsuoka, K., Mouginot, J., Nitsche, F. O., Nogi, Y., Nost, O. A., Popov, S. V., Rignot, E., Rippin, D. M., Rivera, A., Roberts, J., Ross, N., Siegert, M. J., Smith, A. M., Steinhage, D., Studinger, M., Sun, B., Tinto, B. K., Welch, B. C., Wilson, D., Young, D. A., Xiangbin, C., and Zirizzotti, A.: Bedmap2: improved ice bed, surface and thickness datasets for Antarctica, The Cryosphere, 7, 375–393, https://doi.org/10.5194/tc-7-375-2013, 2013. a

Gillet-Chaulet, F., Durand, G., Gagliardini, O., Mosbeux, C., Mouginot, J., Rémy, F., and Ritz, C.: Assimilation of surface velocities acquired between 1996 and 2010 to constrain the form of the basal friction law under Pine Island Glacier, Geophys. Res. Lett., 43, 10–311, https://doi.org/10.1002/2016GL069937, 2016. a

Gladstone, R. M., Lee, V., Rougier, J., Payne, A. J., Hellmer, H., Le Brocq, A., Shepherd, A., Edwards, T. L., Gregory, J., and Cornford, S. L.: Calibrated prediction of Pine Island Glacier retreat during the 21st and 22nd centuries with a coupled flowline model, Earth Planet. Sc. Lett., 333, 191–199, https://doi.org/10.1016/j.epsl.2012.04.022, 2012. a

Goldberg, D., Gourmelen, N., Kimura, S., Millan, R., and Snow, K.: How accurately should we model ice shelf melt rates?, Geophys. Res. Lett., 46, 189–199, https://doi.org/10.1029/2018GL080383, 2019. a

Golledge, N. R., Keller, E. D., Gomez, N., Naughten, K. A., Bernales, J., Trusel, L. D., and Edwards, T. L.: Global environmental consequences of twenty-first-century ice-sheet melt, Nature, 566, 65–72, https://doi.org/10.1038/s41586-019-0889-9, 2019. a

Habermann, M., Maxwell, D., and Truffer, M.: Reconstruction of basal properties in ice sheets using iterative inverse methods, J. Glaciol., 58, 795–808, https://doi.org/10.3189/2012JoG11J168, 2012. a

Higdon, D., Gattiker, J., Williams, B., and Rightley, M.: Computer model calibration using high-dimensional output, J. Am. Stat. Assoc., 103, 570–583, https://doi.org/10.1198/016214507000000888, 2008. a, b, c, d, e

Holden, P. B., Edwards, N., Oliver, K., Lenton, T., and Wilkinson, R.: A probabilistic calibration of climate sensitivity and terrestrial carbon change in GENIE-1, Clim. Dynam., 35, 785–806, https://doi.org/10.1007/s00382-009-0630-8, 2010. a

Holden, P. B., Edwards, N. R., Garthwaite, P. H., and Wilkinson, R. D.: Emulation and interpretation of high-dimensional climate model outputs, J. Appl. Stat., 42, 2038–2055, https://doi.org/10.1080/02664763.2015.1016412, 2015. a

Holland, P. R., Bracegirdle, T. J., Dutrieux, P., Jenkins, A., and Steig, E. J.: West Antarctic ice loss influenced by internal climate variability and anthropogenic forcing, Nat. Geosci., 12, 718–724, https://doi.org/10.1038/s41561-019-0420-9, 2019. a, b

Isaac, T., Petra, N., Stadler, G., and Ghattas, O.: Scalable and efficient algorithms for the propagation of uncertainty from data through inference to prediction for large-scale problems, with application to flow of the Antarctic ice sheet, J. Comput. Phys., 296, 348–368, https://doi.org/10.1016/j.jcp.2015.04.047, 2015. a

Jenkins, A., Dutrieux, P., Jacobs, S., Steig, E. J., Gudmundsson, G. H., Smith, J., and Heywood, K. J.: Decadal ocean forcing and Antarctic ice sheet response: Lessons from the Amundsen Sea, Oceanography, 29, 106–117, 2016. a

Jenkins, A., Shoosmith, D., Dutrieux, P., Jacobs, S., Kim, T. W., Lee, S. H., Ha, H. K., and Stammerjohn, S.: West Antarctic Ice Sheet retreat in the Amundsen Sea driven by decadal oceanic variability, Nat. Geosci., 11, 733–738, 2018. a, b

Joughin, I., Tulaczyk, S., Bamber, J. L., Blankenship, D., Holt, J. W., Scambos, T., and Vaughan, D. G.: Basal conditions for Pine Island and Thwaites Glaciers, West Antarctica, determined using satellite and airborne data, J. Glaciol., 55, 245–257, https://doi.org/10.3189/002214309788608705, 2009. a

Joughin, I., Smith, B. E., and Schoof, C. G.: Regularized Coulomb friction laws for ice sheet sliding: Application to Pine Island Glacier, Antarctica, Geophys. Res. Lett., 46, 4764–4771, https://doi.org/10.1029/2019GL082526, 2019. a, b

Kennedy, M. C. and O'Hagan, A.: Bayesian calibration of computer models, J. Roy. Stat. Soc. B Met., 63, 425–464, https://doi.org/10.1111/1467-9868.00294, 2001. a, b, c

Khazendar, A., Rignot, E., Schroeder, D. M., Seroussi, H., Schodlok, M. P., Scheuchl, B., Mouginot, J., Sutterley, T. C., and Velicogna, I.: Rapid submarine ice melting in the grounding zones of ice shelves in West Antarctica, Nat. Commun., 7, 13243, https://doi.org/10.1038/ncomms13243, 2016. a

Konrad, H., Gilbert, L., Cornford, S. L., Payne, A., Hogg, A., Muir, A., and Shepherd, A.: Uneven onset and pace of ice-dynamical imbalance in the Amundsen Sea Embayment, West Antarctica, Geophys. Res. Lett., 44, 910–918, https://doi.org/10.1002/2016GL070733, 2017. a, b

Lenaerts, J. T., Vizcaino, M., Fyke, J., Van Kampenhout, L., and van den Broeke, M. R.: Present-day and future Antarctic ice sheet climate and surface mass balance in the Community Earth System Model, Clim. Dynam., 47, 1367–1381, https://doi.org/10.1007/s00382-015-2907-4, 2016. a

Levermann, A., Winkelmann, R., Nowicki, S., Fastook, J. L., Frieler, K., Greve, R., Hellmer, H. H., Martin, M. A., Meinshausen, M., Mengel, M., Payne, A. J., Pollard, D., Sato, T., Timmermann, R., Wang, W. L., and Bindschadler, R. A.: Projecting Antarctic ice discharge using response functions from SeaRISE ice-sheet models, Earth Syst. Dynam., 5, 271–293, https://doi.org/10.5194/esd-5-271-2014, 2014. a, b

Little, C. M., Oppenheimer, M., and Urban, N. M.: Upper bounds on twenty-first-century Antarctic ice loss assessed using a probabilistic framework, Nat. Clim. Change, 3, 654–659, https://doi.org/10.1038/nclimate1845, 2013. a

MacAyeal, D. R., Bindschadler, R. A., and Scambos, T. A.: Basal friction of ice stream E, West Antarctica, J. Glaciol., 41, 247–262, https://doi.org/10.3189/S0022143000016154, 1995. a

McMillan, M., Shepherd, A., Sundal, A., Briggs, K., Muir, A., Ridout, A., Hogg, A., and Wingham, D.: Increased ice losses from Antarctica detected by CryoSat-2, Geophys. Res. Lett., 41, 3899–3905, https://doi.org/10.1002/2014GL060111, 2014. a, b, c

Naughten, K. A., Meissner, K. J., Galton-Fenzi, B. K., England, M. H., Timmermann, R., and Hellmer, H. H.: Future projections of Antarctic ice shelf melting based on CMIP5 scenarios, J. Climate, 31, 5243–5261, 2018. a

Nias, I., Cornford, S., and Payne, A.: New mass-conserving bedrock topography for Pine Island Glacier impacts simulated decadal rates of mass loss, Geophys. Res. Lett., 45, 3173–3181, https://doi.org/10.1002/2017GL076493, 2018. a

Nias, I., Cornford, S., Edwards, T., Gourmelen, N., and Payne, A.: Assessing uncertainty in the dynamical ice response to ocean warming in the Amundsen Sea Embayment, West Antarctica, Geophys. Res. Lett., 46, 11253–11260, https://doi.org/10.1029/2019GL084941, 2019. a

Nias, I. J., Cornford, S. L., and Payne, A. J.: Contrasting the modelled sensitivity of the Amundsen Sea Embayment ice streams, J. Glaciol., 62, 552–562, https://doi.org/10.1017/jog.2016.40, 2016. a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p

O'Hagan, A.: Bayesian analysis of computer code outputs: A tutorial, Reliab. Eng. Syst. Safe., 91, 1290–1300, https://doi.org/10.1016/j.ress.2005.11.025, 2006. a

Pattyn, F.: The paradigm shift in Antarctic ice sheet modelling, Nat. Commun., 9, 2728, https://doi.org/10.1038/s41467-018-05003-z, 2018. a

Pattyn, F., Favier, L., Sun, S., and Durand, G.: Progress in numerical modeling of Antarctic ice-sheet dynamics, Current Climate Change Reports, 3, 174–184, https://doi.org/10.1007/s40641-017-0069-7, 2017. a

Petra, N., Martin, J., Stadler, G., and Ghattas, O.: A computational framework for infinite-dimensional Bayesian inverse problems, Part II: Stochastic Newton MCMC with application to ice sheet flow inverse problems, SIAM J. Sci. Comput., 36, A1525–A1555, https://doi.org/10.1137/130934805, 2014. a

Pollard, D., Chang, W., Haran, M., Applegate, P., and DeConto, R.: Large ensemble modeling of the last deglacial retreat of the West Antarctic Ice Sheet: comparison of simple and advanced statistical techniques, Geosci. Model Dev., 9, 1697–1723, https://doi.org/10.5194/gmd-9-1697-2016, 2016. a, b

Pritchard, H., Ligtenberg, S. R., Fricker, H. A., Vaughan, D. G., van den Broeke, M. R., and Padman, L.: Antarctic ice-sheet loss driven by basal melting of ice shelves, Nature, 484, 502–505, https://doi.org/10.1038/nature10968, 2012. a

Pukelsheim, F.: The Three Sigma Rule, Am. Stat., 48, 88–91, https://doi.org/10.1080/00031305.1994.10476030, 1994. a

Rasmussen, C. E. and Williams, C. K.: Gaussian processes for machine learning, vol. 2, MIT Press Cambridge, MA, 2006. a, b

Rignot, E., Mouginot, J., and Scheuchl, B.: Ice flow of the Antarctic ice sheet, Science, 333, 1427–1430, https://doi.org/10.1126/science.1208336, 2011. a, b

Rignot, E., Mouginot, J., Morlighem, M., Seroussi, H., and Scheuchl, B.: Widespread, rapid grounding line retreat of Pine Island, Thwaites, Smith, and Kohler glaciers, West Antarctica, from 1992 to 2011, Geophys. Res. Lett., 41, 3502–3509, https://doi.org/10.1002/2014GL060140, 2014. a

Ritz, C., Edwards, T. L., Durand, G., Payne, A. J., Peyaud, V., and Hindmarsh, R. C.: Potential sea-level rise from Antarctic ice-sheet instability constrained by observations, Nature, 528, 115–118, https://doi.org/10.1038/nature16147, 2015. a, b, c, d, e, f

Ruckert, K. L., Shaffer, G., Pollard, D., Guan, Y., Wong, T. E., Forest, C. E., and Keller, K.: Assessing the impact of retreat mechanisms in a simple Antarctic ice sheet model using Bayesian calibration, PLoS One, 12, e0170052, https://doi.org/10.1371/journal.pone.0170052, 2017. a

Salter, J. M. and Williamson, D. B.: Efficient calibration for high-dimensional computer model output using basis methods, arXiv preprint, arXiv:1906.05758, 2019. a

Salter, J. M., Williamson, D. B., Scinocca, J., and Kharin, V.: Uncertainty quantification for computer models with spatial output using calibration-optimal bases, J. Am. Stat. Assoc., 114, 1800–1814, https://doi.org/10.1080/01621459.2018.1514306, 2018. a, b, c, d, e

Schlegel, N.-J., Seroussi, H., Schodlok, M. P., Larour, E. Y., Boening, C., Limonadi, D., Watkins, M. M., Morlighem, M., and van den Broeke, M. R.: Exploration of Antarctic Ice Sheet 100-year contribution to sea level rise and associated model uncertainties using the ISSM framework, The Cryosphere, 12, 3511–3534, https://doi.org/10.5194/tc-12-3511-2018, 2018. a, b

Schoof, C. and Hindmarsh, R. C.: Thin-film flows with wall slip: an asymptotic analysis of higher order glacier flow models, Q. J. Mech. Appl. Math., 63, 73–114, 2010. a

Sexton, D. M., Murphy, J. M., Collins, M., and Webb, M. J.: Multivariate probabilistic projections using imperfect climate models part I: outline of methodology, Clim. Dynam., 38, 2513–2542, https://doi.org/10.1007/s00382-011-1208-9, 2012. a, b

Shepherd, A., Ivins, E., Rignot, E., Smith, B., Van Den Broeke, M., Velicogna, I., Whitehouse, P., Briggs, K., Joughin, I., Krinner, G., , Nowicki, S., Payne, T., Scambos, T., Schlegel, N., Geruo, A., Agosta, C., Ahlstrom, A., Bobonis, G., Barletta, V., Blazquez, A., Bonin, J., Csatho, B., Cullather, R., Felikson, D., Fettweis, X., Forsberg, R., Gallee, H., Gardner, A., Gilbert, L., Groh, A., Gunter, B., Hanna, E., Harig, C., Helm, V., Horvath, A., Horwath, M., Khan, S., Kjeldsen, K. K., Konrad, H., Langen, P., Lecavalier, B., Loomis, B., Luthcke, S., McMillan, M., Melini, D., Mernild, S., Mohajerani, Y., Moore, P., Mouginot, J., Moyano, G., Muir, A., Nagler, T., Nield, G., Nilsson, J., Noel, B., Otosaka, I., Pattle, M. E., Peltier, W. R., Pie, N., Bietbroek, R., Rott, H., Sandberg-Sorensen, L., Sasgen, I., Save, H., Scheuchl, B., Schrama, E., Schroder, L., Seo, K. W., Simonsen, S., Slater, T., Spada, G., Sutterley, T., Talpe, M., Tarasov, L., Van de Berg, W., Van der Wal, W., Van Wessem, M., Vishwakarma, B., Wiese, D., Wouters, B: Mass balance of the Antarctic Ice Sheet from 1992 to 2017, Nature, 558, 219–222, https://doi.org/10.1038/s41586-018-0179-y, 2018. a

Vernon, I., Goldstein, M., and Bower, R. G.: Galaxy formation: a Bayesian uncertainty analysis, Bayesian Anal., 5, 619–669, https://doi.org/10.1214/10-BA524, 2010. a

Wernecke, A.: Spatial probabilistic calibration for ice sheet model data, GitHub, available at: https://github.com/Andreas948, 2020. a

Williamson, D. B., Blaker, A. T., and Sinha, B.: Tuning without over-tuning: parametric uncertainty quantification for the NEMO ocean model, Geosci. Model Dev., 10, 1789–1816, https://doi.org/10.5194/gmd-10-1789-2017, 2017. a

Yu, H., Rignot, E., Seroussi, H., and Morlighem, M.: Retreat of Thwaites Glacier, West Antarctica, over the next 100 years using various ice flow models, ice shelf melt scenarios and basal friction laws, The Cryosphere, 12, 3861–3876, https://doi.org/10.5194/tc-12-3861-2018, 2018. a, b